The first major point of this paper is that the joint distribution of the true null p-values is a highly informative property to consider, whereas verifying that each null p-value has a marginal U(0,1) distribution is not as directly informative.
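One way to see why the joint distribution matters is a small simulation. The sketch below is illustrative and not taken from the paper: it assumes null z-statistics that share a single common factor (a hypothetical dependence model controlled by `rho`), so every p-value is marginally U(0,1) while the tests within a study are correlated. All function names and parameter values are assumptions chosen for illustration.

```python
import random
from statistics import NormalDist, pstdev

STD_NORMAL = NormalDist()


def study_null_pvalues(rng, num_tests, rho):
    """Simulate one study of null z-statistics that share a common factor.

    Each statistic is sqrt(rho) * shared + sqrt(1 - rho) * noise, so it is
    marginally N(0, 1) for any rho in [0, 1]; rho only changes the dependence.
    """
    shared = rng.gauss(0.0, 1.0)
    pvalues = []
    for _ in range(num_tests):
        z = rho ** 0.5 * shared + (1.0 - rho) ** 0.5 * rng.gauss(0.0, 1.0)
        pvalues.append(2.0 * (1.0 - STD_NORMAL.cdf(abs(z))))
    return pvalues


def fraction_significant_per_study(rho, num_studies=300, num_tests=500,
                                   alpha=0.05, seed=1):
    """Fraction of null p-values below alpha, computed separately per study."""
    rng = random.Random(seed)
    fractions = []
    for _ in range(num_studies):
        pvalues = study_null_pvalues(rng, num_tests, rho)
        fractions.append(sum(p < alpha for p in pvalues) / num_tests)
    return fractions


independent = fraction_significant_per_study(rho=0.0)
dependent = fraction_significant_per_study(rho=0.5)

for label, fractions in (("independent", independent), ("dependent", dependent)):
    average = sum(fractions) / len(fractions)
    print(f"{label}: mean fraction = {average:.3f}, "
          f"spread across studies = {pstdev(fractions):.3f}")
```

Under these assumptions the average fraction of null p-values below 0.05 stays close to 0.05 in both settings, but the study-to-study spread of that fraction is much larger with dependence. A check of each marginal distribution alone would not reveal this study-specific behavior.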

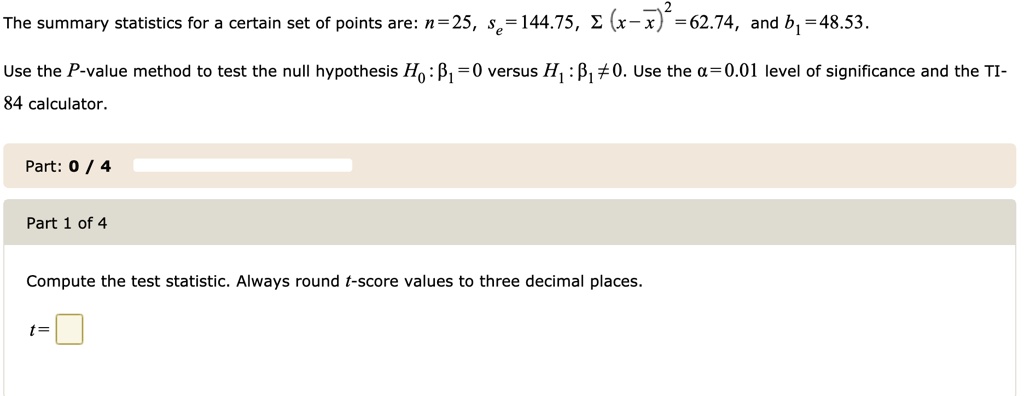

Simultaneously performing thousands or more hypothesis tests is one of the main data analytic procedures applied in high-dimensional biology (Storey and Tibshirani, 2003). In hypothesis testing, a test statistic is formed based on the observed data and then it is compared to a null distribution to form a p-value. A fundamental property of a statistical hypothesis test is that correctly formed p-values follow the Uniform(0,1) distribution for continuous data when the null hypothesis is true and simple. (We hereafter abbreviate this distribution by U(0,1).) This property allows for precise, unbiased evaluation of error rates and statistical evidence in favor of the alternative.

Until now there has been no analogous criterion when performing thousands to millions of tests simultaneously. Just as with a single hypothesis test, the behavior under true null hypotheses is the primary consideration in defining well behaved p-values. However, when performing multiple tests, the situation is more complicated for several reasons: (1) among the entire set of hypothesis tests, a subset are true null hypotheses and the remaining subset are true alternative hypotheses, and the behavior of the p-values may depend on this configuration; (2) the data from each true null hypothesis may follow a different null distribution; (3) the data across hypothesis tests may be dependent; and (4) the entire set of p-values is typically utilized to make a decision about significance, some of which will come from true alternative hypotheses. Because of this, it is not possible to simply extrapolate the definition of a correct p-value in a single hypothesis test to that of multiple hypothesis tests. We provide two key examples to illustrate this point in the following section, both of which are commonly encountered in high-dimensional biology applications.
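The single-test U(0,1) property can be checked by simulation. The following is a minimal sketch, assuming a two-sided z-test of a zero mean with known unit variance (a setup chosen here for illustration, not one described in the text). It draws many data sets under a true, simple null and measures how far the empirical distribution of the resulting p-values is from U(0,1) using the Kolmogorov-Smirnov distance. The function names, sample size, and number of tests are hypothetical.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def two_sided_z_pvalue(sample, sigma=1.0):
    """Two-sided p-value for H0: mean = 0 when the standard deviation is known."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / n ** 0.5)
    return 2.0 * (1.0 - STD_NORMAL.cdf(abs(z)))


def ks_distance_from_uniform(pvalues):
    """Kolmogorov-Smirnov distance between the empirical CDF and the U(0,1) CDF."""
    ordered = sorted(pvalues)
    m = len(ordered)
    return max(max((i + 1) / m - p, p - i / m) for i, p in enumerate(ordered))


def simulate_null_pvalues(num_tests=2000, n=20, seed=0):
    """P-values from independent tests in which the null hypothesis is true."""
    rng = random.Random(seed)
    return [
        two_sided_z_pvalue([rng.gauss(0.0, 1.0) for _ in range(n)])
        for _ in range(num_tests)
    ]


null_pvalues = simulate_null_pvalues()
ks_distance = ks_distance_from_uniform(null_pvalues)
fraction_below_05 = sum(p < 0.05 for p in null_pvalues) / len(null_pvalues)
print(f"KS distance from U(0,1): {ks_distance:.3f}")
print(f"fraction of null p-values below 0.05: {fraction_below_05:.3f}")
```

A small distance and a fraction near 0.05 are what the uniformity property predicts for correctly formed p-values. This check only addresses each test marginally and says nothing about the joint behavior of the set.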
Simultaneously performing many hypothesis tests is a problem commonly encountered in high-dimensional biology. In this setting, a large set of p-values is calculated from many related features measured simultaneously. Classical statistics provides a criterion for defining what a “correct” p-value is when performing a single hypothesis test. We show here that even when each p-value is marginally correct under this single hypothesis criterion, it may be the case that the joint behavior of the entire set of p-values is problematic. On the other hand, there are cases where each p-value is marginally incorrect, yet the joint distribution of the set of p-values is satisfactory. Here, we propose a criterion defining a well behaved set of simultaneously calculated p-values that provides precise control of common error rates and we introduce diagnostic procedures for assessing whether the criterion is satisfied with simulations. Multiple testing p-values that satisfy our new criterion avoid potentially large study specific errors, but also satisfy the usual assumptions for strong control of false discovery rates and family-wise error rates. We utilize the new criterion and proposed diagnostics to investigate two common issues in high-dimensional multiple testing for genomics: dependent multiple hypothesis tests and pooled versus test-specific null distributions.

$H_0$: $\mu_1 = \mu_2$. Null hypothesis: the means of the final exam scores are equal for the online and face-to-face statistics classes. $H_a$: $\mu_1$ … The evidence shows that the mean of the final exam scores for the online class is lower than that of the face-to-face class. In order to account for the variation, we take the difference of the sample means, $\overline{X}_1 - \overline{X}_2$.

The comparison of two population means is very common. A difference between the two samples depends on both the means and the standard deviations. Very different means can occur by chance if there is great variation among the individual samples. Note: The test comparing two independent population means with unknown and possibly unequal population standard deviations is called the Aspin-Welch t-test. The degrees of freedom formula was developed by Aspin and Welch.
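The test statistic and degrees of freedom for this comparison can be sketched in a few lines. The example below assumes the standard unequal-variance form: the difference of sample means divided by the square root of the summed per-sample variance terms, with the degrees-of-freedom approximation often written as the Welch-Satterthwaite formula. The function name `welch_t` and the score lists are hypothetical and serve only as illustration.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample1, sample2):
    """Return (t statistic, approximate degrees of freedom) for two
    independent samples with possibly unequal population variances."""
    n1, n2 = len(sample1), len(sample2)
    # Squared standard error contributed by each sample: s_i^2 / n_i.
    v1 = variance(sample1) / n1
    v2 = variance(sample2) / n2
    t_stat = (mean(sample1) - mean(sample2)) / sqrt(v1 + v2)
    # Approximate degrees of freedom for unequal variances.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t_stat, df


# Hypothetical final exam scores, invented for illustration only.
online_scores = [67.6, 41.2, 85.3, 55.9, 82.4, 91.2, 73.5, 94.1, 64.7, 64.7]
face_to_face_scores = [77.9, 95.3, 81.2, 74.1, 98.8, 88.2, 85.9, 92.9, 87.1, 88.2]

t_stat, df = welch_t(online_scores, face_to_face_scores)
print(f"t = {t_stat:.3f}, approximate df = {df:.2f}")
```

The p-value would then come from a Student t distribution with `df` degrees of freedom. That step is omitted here because the Python standard library does not provide a t distribution.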
